The Complete Guide to Enterprise LLM Fine-Tuning: Making AI Work for Your Business

Katonic AI

Katonic AI's award-winning platform allows companies to build enterprise-grade Generative AI apps and Traditional ML models.

This blog outlines a five-phase process: project setup, model selection, dataset preparation, hyperparameter configuration, and training execution, using Parameter-Efficient Fine-Tuning (PEFT) with the LoRA methodology. Real-world case studies demonstrate significant business benefits: a telecommunications company achieved a 68% reduction in agent escalations, while a manufacturing firm improved defect detection accuracy from 54% to 78%. The platform makes enterprise-grade fine-tuning accessible without requiring deep technical expertise, delivering measurable ROI through improved performance, cost efficiency, and competitive advantage.

Here’s the thing about off-the-shelf AI models: they’re brilliant generalists but often fall short when it comes to your specific business needs. You know what I mean: ChatGPT can write poetry and explain quantum physics, but ask it about your company’s proprietary processes or industry-specific terminology, and you’ll get generic responses that don’t quite hit the mark.

That’s where fine-tuning comes in. And honestly, it’s one of the most powerful yet underutilised tools in enterprise AI.

Let’s talk about the elephant in the room. Most businesses deploy AI solutions only to find them underwhelming in real-world applications. A pharmaceutical company might need an AI that understands drug discovery terminology. A legal firm requires models that grasp complex contract language. A manufacturing company needs AI that comprehends their unique quality control processes.

Generic models simply can’t deliver this level of specialisation out of the box.

Fine-tuning changes everything. It’s the difference between having a brilliant intern who knows a bit about everything and a seasoned specialist who truly understands your business.

Think of fine-tuning as advanced corporate training for AI models. You’re taking a smart foundation model and teaching it to excel in your specific domain without losing its general intelligence.

The process modifies selected neural network weights to optimise performance on your targeted tasks.

But here’s the clever bit: you’re not training from scratch (which would be prohibitively expensive); you’re adapting an existing model to your needs.

At Katonic AI, we use Parameter-Efficient Fine-Tuning (PEFT) with Low-Rank Adaptation (LoRA) methodologies. This approach maintains computational efficiency whilst delivering exceptional results tailored to your business requirements.
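To make the efficiency point concrete, here is a minimal sketch of the arithmetic behind LoRA. Instead of updating a full d × k weight matrix W, LoRA keeps W frozen and trains two small factors, B (d × r) and A (r × k), using W + (alpha / r) · BA in the forward pass. The layer size and rank below are illustrative assumptions, not Katonic platform defaults.

```python
def full_params(d: int, k: int) -> int:
    """Parameters in the full weight matrix W (d x k)."""
    return d * k


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters in the two low-rank factors B (d x r) and A (r x k)."""
    return r * (d + k)


# Illustrative values only (assumed, not platform defaults).
d, k, r = 4096, 4096, 8

full = full_params(d, k)
lora = lora_trainable_params(d, k, r)
fraction = lora / full

print(f"full matrix:  {full:,} parameters")
print(f"LoRA factors: {lora:,} parameters ({fraction:.2%} of full)")
```

With these assumed sizes, the trainable factors hold well under 1% of the parameters in the original matrix, which is where the compute and memory savings come from.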

Our platform breaks down the fine-tuning process into five manageable phases:

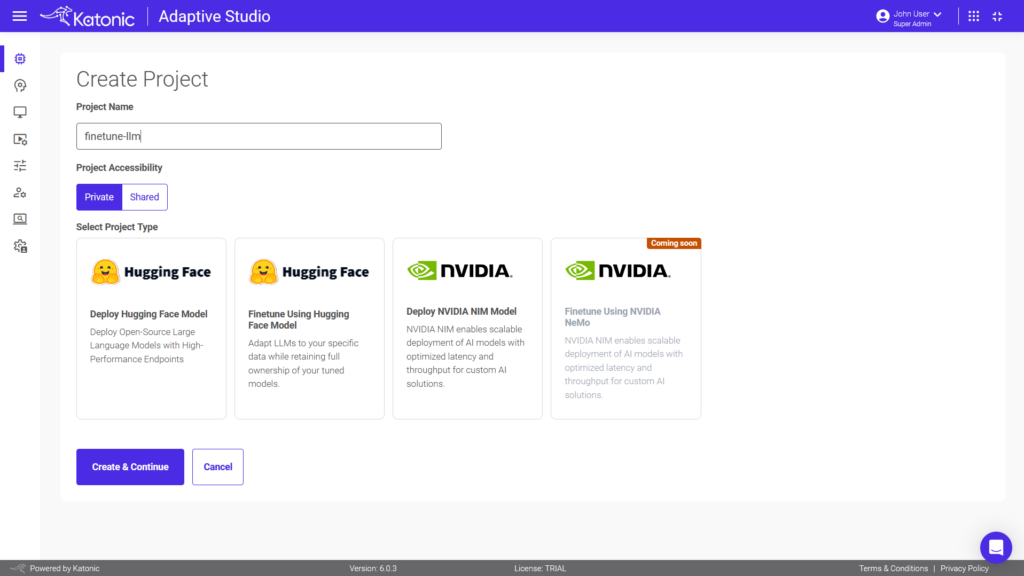

First things first: you’ll set up your fine-tuning project with proper access controls. Whether you need private deployment for sensitive data or shared access for team collaboration, the platform accommodates your security requirements.

The key decision here is selecting “Finetune Using Hugging Face Model”, which initialises our PEFT pipeline. This preserves your model ownership rights whilst enabling full control over adaptation parameters.

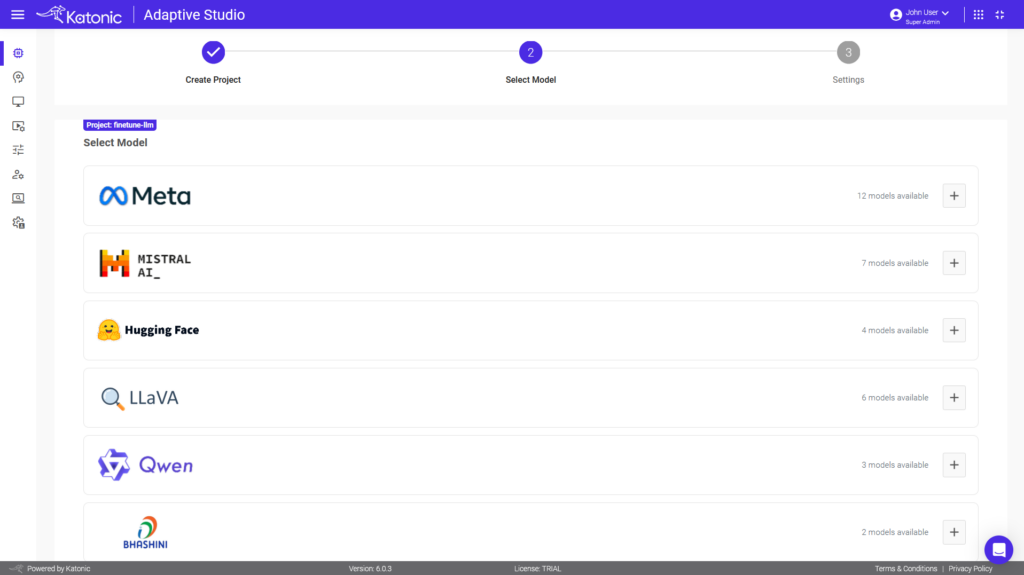

This is where strategy meets technical implementation. The Katonic platform supports multiple transformer architectures including models from Meta, Mistral AI, WatsonX, Qwen, and others.

Your selection criteria should consider:

Here’s the practical bit: if you need a model that’s not currently available, we can add it to our base models provided it’s supported by vLLM. This flexibility ensures you’re never locked into suboptimal choices.

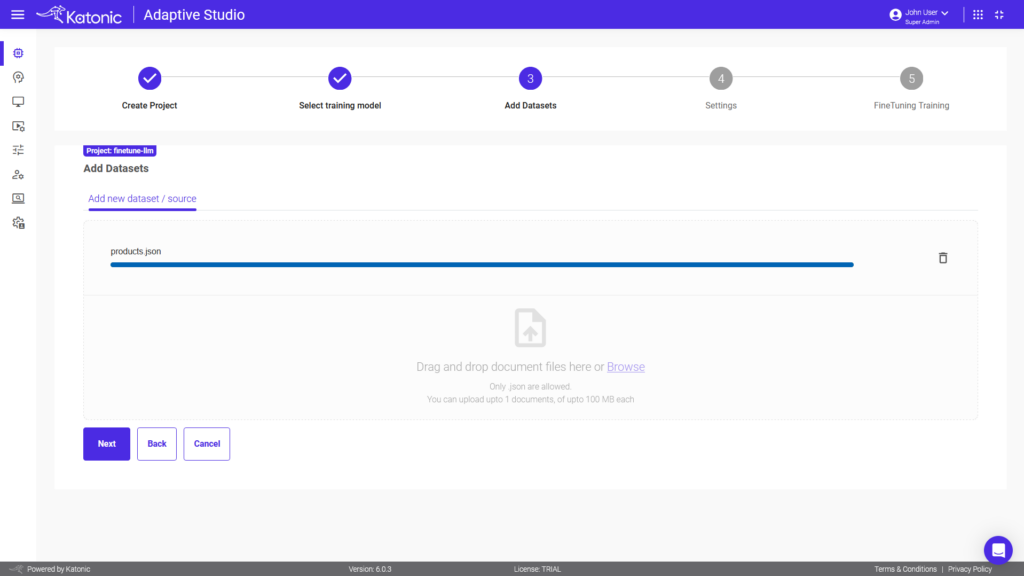

Your data is the secret sauce of effective fine-tuning. The platform accepts JSON/JSONL formats with UTF-8 encoding, supporting both question-answering and instruction-tuning implementations.
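As a rough illustration, JSONL stores one JSON object per line. The sketch below checks records against the question-answering and instruction-tuning field names used in this section and writes them to a UTF-8 file. The function names and the `train.jsonl` file name are hypothetical, and the platform’s own validation rules may differ.

```python
import json
import os
import tempfile

# Field names follow the two example formats in this section.
QA_FIELDS = {"context", "question", "answer"}
INSTRUCTION_FIELDS = {"instruction", "input", "output"}


def record_kind(record: dict) -> str:
    """Return 'qa' or 'instruction'; raise ValueError for anything else."""
    keys = set(record)
    if keys == QA_FIELDS:
        kind = "qa"
    elif keys == INSTRUCTION_FIELDS:
        kind = "instruction"
    else:
        raise ValueError(f"unexpected fields: {sorted(keys)}")
    if not all(isinstance(value, str) for value in record.values()):
        raise ValueError("all field values must be strings")
    return kind


def write_jsonl(records: list, path: str) -> None:
    """Validate each record, then write one JSON object per line as UTF-8."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            record_kind(record)
            # ensure_ascii=False keeps non-ASCII text readable in the file.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def read_jsonl(path: str) -> list:
    """Read a JSONL file back into a list of dicts, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Both formats are shown together only for illustration; a real dataset
# would normally use a single format throughout.
records = [
    {"context": "Your company's specific knowledge",
     "question": "Domain-specific question",
     "answer": "Precise, company-relevant answer"},
    {"instruction": "Translate technical specification",
     "input": "Your proprietary content",
     "output": "Expected company-standard response"},
]

path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
write_jsonl(records, path)
loaded = read_jsonl(path)
print([record_kind(r) for r in loaded])
```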

For question-answering tasks, your data might look like:

    {
      "context": "Your company's specific knowledge",
      "question": "Domain-specific question",
      "answer": "Precise, company-relevant answer"
    }

For instruction tuning, the format focuses on teaching the model to follow your business-specific instructions:

    {
      "instruction": "Translate technical specification",
      "input": "Your proprietary content",
      "output": "Expected company-standard response"
    }

The platform handles all preprocessing automatically: validation, truncation, tokenisation, and train/validation splitting.
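The platform does this for you, but a small sketch helps show what truncation and a train/validation split involve. Whitespace splitting stands in for a real tokeniser here, and the 512-token limit and 10% validation share are assumptions chosen for illustration, not the platform’s settings.

```python
import random


def truncate(text: str, max_tokens: int) -> str:
    """Keep at most max_tokens whitespace-separated tokens.

    Whitespace splitting is a stand-in; real pipelines use the model's tokeniser.
    """
    tokens = text.split()
    return " ".join(tokens[:max_tokens])


def train_validation_split(records, validation_fraction=0.1, seed=0):
    """Shuffle a copy of the records and split off a validation set."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


# Synthetic records with deliberately over-long inputs.
records = [
    {"instruction": f"Task {i}", "input": "word " * 1000, "output": "ok"}
    for i in range(100)
]

for record in records:
    record["input"] = truncate(record["input"], max_tokens=512)

train, validation = train_validation_split(records)
print(len(train), len(validation))
```

Fixing the seed keeps the split reproducible between runs, which makes validation metrics comparable when you retrain.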

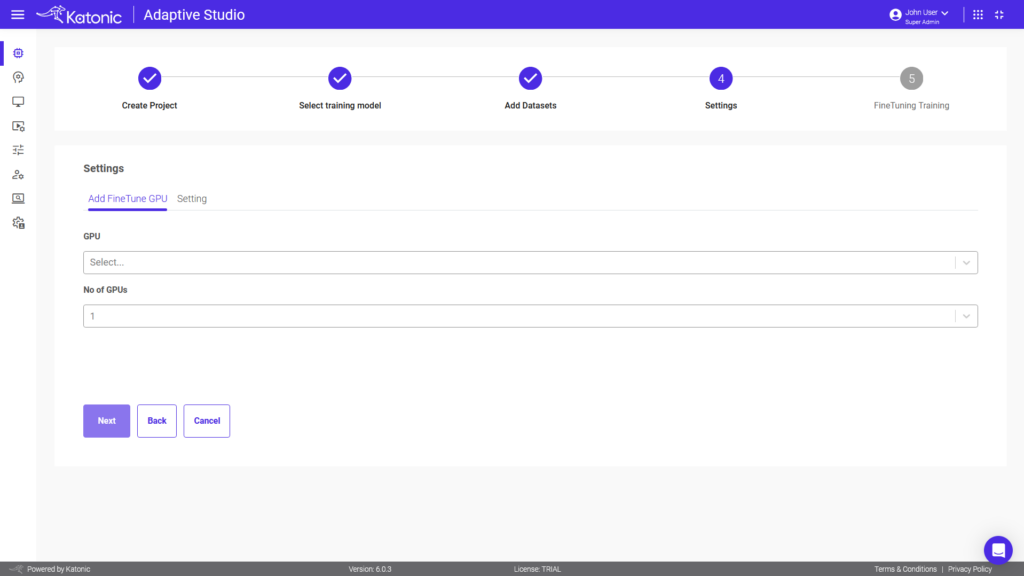

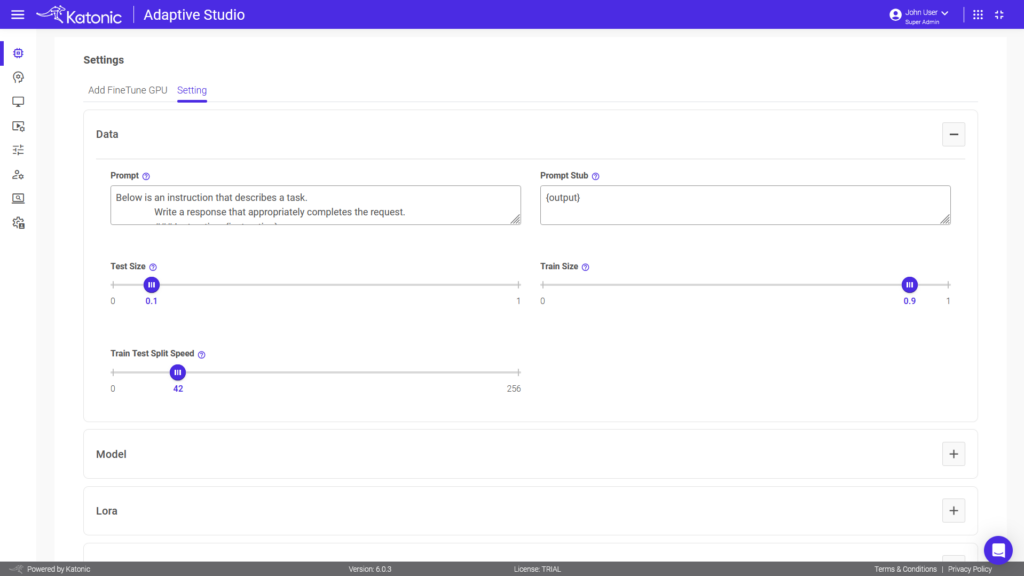

This is where the magic happens, but don’t worry: you don’t need a PhD in machine learning to get it right.

Key parameters include:

The platform provides sensible defaults, but you can adjust these based on your specific requirements and dataset characteristics.
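As a rough illustration of what such a configuration can look like, the sketch below groups settings that are commonly adjusted in LoRA fine-tuning into a small config object. Every field name and value is a hypothetical example, not the platform’s parameter list or its defaults.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LoraTrainingConfig:
    # All fields and values are hypothetical examples, not platform defaults.
    rank: int = 8                # r: size of the low-rank factors
    alpha: float = 16.0          # numerator of the LoRA scaling factor
    dropout: float = 0.05        # dropout applied on the LoRA path
    learning_rate: float = 2e-4
    epochs: int = 3
    batch_size: int = 16

    def __post_init__(self):
        if self.rank < 1:
            raise ValueError("rank must be a positive integer")
        if not 0.0 <= self.dropout < 1.0:
            raise ValueError("dropout must be in [0, 1)")
        if self.learning_rate <= 0:
            raise ValueError("learning_rate must be positive")

    @property
    def scaling(self) -> float:
        """Factor applied to the low-rank update: alpha / r."""
        return self.alpha / self.rank

    def total_steps(self, num_records: int) -> int:
        """Optimiser steps over all epochs, without gradient accumulation."""
        return math.ceil(num_records / self.batch_size) * self.epochs


config = LoraTrainingConfig()
print(config.scaling)
print(config.total_steps(num_records=8000))
```

Validating values when the config is created surfaces mistakes before any GPU time is spent.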

Once configured, the system executes fine-tuning through several technical stages:

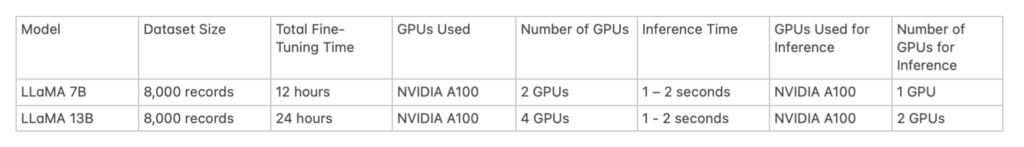

Based on our empirical benchmarking with enterprise datasets, here’s what you can expect:

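For 8,000 training records, LLaMA 7B models require 12 hours on 2 NVIDIA A100 GPUs, whilst LLaMA 13B models need 24 hours on 4 NVIDIA A100 GPUs.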

These numbers translate to practical business value. One financial services client reduced their document processing time from 45 minutes to under 3 minutes per complex contract review after fine-tuning their model on proprietary legal language.

A telecommunications company fine-tuned their customer service AI on internal knowledge bases and product documentation. Results included a 68% reduction in agent escalations and a 42% improvement in first-call resolution.

A manufacturing firm adapted their quality control AI to understand their specific processes and terminology. Defect detection accuracy improved from 54% to 78%, with $2.3M in annual savings.

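The platform includes access to proven enterprise datasets: Stanford Alpaca (52K examples), Databricks Dolly (15K human-generated instructions), and Open-Platypus (25K STEM reasoning tasks).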

These datasets provide excellent starting points for common business applications whilst you develop your proprietary training data.

Fine-tuning isn’t just a technical upgrade—it’s a strategic business decision that delivers measurable ROI:

Cost Efficiency: Reduce dependency on expensive API calls to external services

Competitive Advantage: Create AI capabilities your competitors can’t replicate

Data Security: Keep sensitive information within your infrastructure

Performance: Achieve domain-specific accuracy that generic models can’t match

Compliance: Maintain control over model behaviour for regulated industries

Ready to transform your AI from generic to genuinely useful? Here’s your action plan:

The Katonic platform makes this entire process accessible through intuitive interfaces, with no data science PhD required. Our Parameter-Efficient Fine-Tuning approach means you can achieve enterprise-grade results without enterprise-scale computational resources.

Generic AI models were just the beginning. The real transformation happens when AI truly understands your business, speaks your language, and delivers results tailored to your specific needs.

Fine-tuning is no longer a nice-to-have; it’s becoming essential for businesses serious about AI transformation. The companies adapting their models today will have significant competitive advantages tomorrow.

Ready to see how fine-tuning can transform your AI capabilities? Visit www.katonic.ai to book a demo and discover what specialised AI can do for your business.

LLM fine-tuning is the process of adapting pre-trained AI models to specific business domains and use cases whilst preserving their general knowledge. Enterprises need fine-tuning because generic AI models often provide vague, irrelevant responses for industry-specific tasks, proprietary processes, or specialised terminology. Fine-tuning transforms AI from a brilliant generalist to a domain expert that understands your business context.

Based on Katonic’s benchmarking with 8,000 training records, LLaMA 7B models require 12 hours on 2 NVIDIA A100 GPUs, whilst LLaMA 13B models need 24 hours on 4 NVIDIA A100 GPUs. The platform uses Parameter-Efficient Fine-Tuning (PEFT) with LoRA methodology to optimise computational requirements, making enterprise-grade fine-tuning accessible without massive infrastructure investments.
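The two benchmark figures are easier to compare on a GPU-hour basis. Here is a small sketch of that arithmetic, using only the numbers quoted in this answer; the function name is illustrative.

```python
def gpu_hours(wall_clock_hours: float, num_gpus: int) -> float:
    """Total GPU time consumed: wall-clock hours multiplied by GPU count."""
    return wall_clock_hours * num_gpus


# Figures quoted above for 8,000 training records.
benchmarks = {
    "LLaMA 7B": {"hours": 12, "gpus": 2},
    "LLaMA 13B": {"hours": 24, "gpus": 4},
}

totals = {
    model: gpu_hours(b["hours"], b["gpus"]) for model, b in benchmarks.items()
}

for model, total in totals.items():
    print(f"{model}: {total:.0f} GPU-hours")
```

On these figures the 13B run uses four times the GPU-hours of the 7B run (96 versus 24), which is worth weighing against any accuracy gain when choosing a base model.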

The Katonic platform accepts JSON/JSONL formats with UTF-8 encoding, supporting both question-answering and instruction-tuning implementations. You can use your proprietary data or start with proven datasets like Stanford Alpaca (52K examples), Databricks Dolly (15K human-generated instructions), or Open-Platypus (25K STEM reasoning tasks) whilst developing your custom training data.

Companies typically see significant operational improvements: telecommunications firms report 68% reduction in escalations and 42% improvement in first-call resolution, whilst manufacturing companies achieve 78% defect detection accuracy (up from 54%) and $2.3M annual savings. Fine-tuning delivers cost efficiency, competitive advantage, enhanced data security, superior domain-specific performance, and better regulatory compliance.
