We have always relied on different models for different tasks in machine learning. With the introduction of multi-modality and Large Language Models (LLMs), this has changed.

Gone are the days when we needed separate models for classification, named entity recognition (NER), question-answering (QA), and many other tasks.

As language models become increasingly common, it becomes crucial to employ a broad set of strategies and tools to unlock their potential fully. Foremost among these strategies is prompt engineering, which involves the careful selection and arrangement of words within a prompt or query to guide the model towards producing the desired response. If you’ve tried to coax a desired output from ChatGPT or Stable Diffusion, then you’re one step closer to becoming a proficient, prompt engineer.

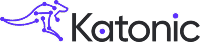

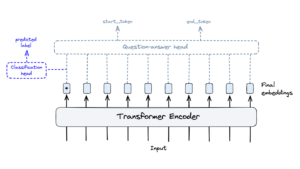

Before transfer learning, different tasks and use cases required training different models. With the introduction of transformers and transfer learning, all that was needed to adapt a language model for different tasks was a few small layers at the end of the network (the head) and a little fine-tuning.

Transformers and the idea of transfer learning allowed us to reuse the same core components of pre-trained transformer models for different tasks by switching model “heads” and performing fine-tuning.

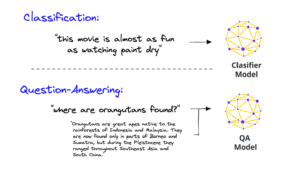

Today, even that approach is outdated. Why change these last few model layers and go through an entire fine-tuning process when you can prompt the model to do classification or QA.

Many tasks can be performed using the same Large Language Models (LLMs) by simply changing the instructions in the prompts.

Large Language Models (LLMs) can perform all these tasks and more. These models have been trained with a simple concept, you input a sequence of text, and the model outputs a sequence of text. The one variable here is the input text — the prompt. This means everyone can now be a computer programmer as all they need to do is speak to the computer.

Everyone is a programmer now – you just have to say something to the computer.

In this new age of LLMs, prompts are king. Bad prompts produce bad outputs, and good prompts are unreasonably powerful. Constructing good prompts is a crucial skill for those building with LLMs.

Here are some tips on how to write effective LLM prompts:

Be specific: The more specific you are in your prompt, the more likely you are to get the results you want. For example, if you want to generate a poem about the beauty of nature, you might use the following prompt:

Code snippet

Write a poem about the beauty of nature, using the following keywords: trees, flowers, and sunshine.

Use keywords: Keywords can help you to narrow down the results and get more specific. In the example above, the keywords “trees,” “flowers,” and “sunshine” help to ensure that the poem generated by the LLM will be about the beauty of nature.

Use adjectives and verbs: Adjectives and verbs can help you to add more detail and realism to your prompts. For example, you might use the following prompt to generate a poem about a forest:

Write a poem about a forest using the following adjectives: lush, green, and mysterious. Use the following verbs: grow, sway, and whisper.

Experiment: There is no one right way to write an LLM prompt. The best way to learn how to write effective prompts is to experiment. Try different things and see what works best for you.

With a little practice, you will be able to write effective LLM prompts that will help you to get the results you want.

As we move forward, building software systems with LLMs will primarily involve writing text instructions. Given the importance of crafting these instructions, it is likely that we will need a subset of the features listed above. However, I believe that it is only a matter of time before many of these features are integrated into existing tools.

In the meantime, it’s important to understand how prompts work and what makes them effective. By knowing this, you will be able to give your models the instructions they need to do their job well. Good luck!