6 Powerful RAG Improvements to Supercharge Your Enterprise AI

Katonic AI

Katonic AI's award-winning platform allows companies to build enterprise-grade Generative AI apps and Traditional ML models

This blog outlines six key optimisations for Retrieval-Augmented Generation (RAG) systems on the Katonic AI Platform, including system prompt configuration, chunk size optimisation, retrieval parameter tuning, vision indexing for complex documents, metadata filtering, and embedding model selection. Each improvement addresses specific challenges in enterprise AI deployments, with practical implementation steps for technical decision-makers.

Ever asked your enterprise AI assistant a question only to receive a vague, irrelevant answer? You’re not alone. While Retrieval-Augmented Generation (RAG) has revolutionised how AI systems access knowledge, the difference between a mediocre implementation and an exceptional one is night and day.

At Katonic AI, we’ve spent years refining our RAG capabilities to deliver enterprise-grade results. Today, I’m sharing six powerful improvements you can make to transform your RAG system from merely functional to genuinely impressive.

Before diving into the technical improvements, let’s talk about why this matters. Poorly optimised RAG systems return exactly the kind of vague or irrelevant answers described above, and each of these failures directly impacts user adoption, trust, and ultimately the ROI of your AI investment.

The good news? Most RAG issues can be solved with the right configuration.

Think of system prompts as the invisible instruction manual for your AI assistant. When properly configured, they establish:

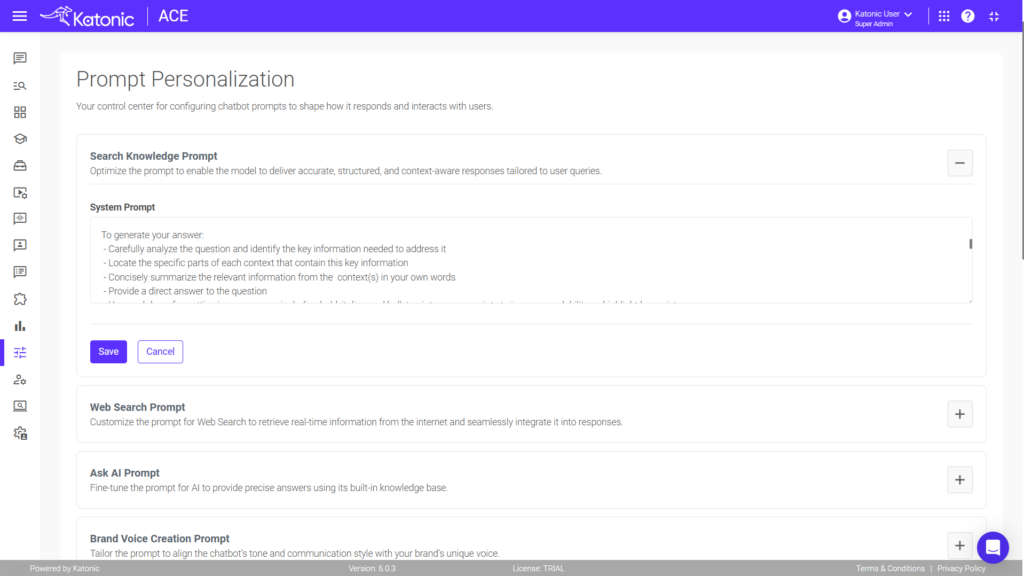

On the Katonic Platform, you can easily configure system prompts by navigating to: ACE → Configuration → Prompt Personalisation → Search Knowledge Prompt → System Prompt

But system prompts are only half the story. Your AI’s personality (its tone, style, and communication approach) is defined by persona prompts. A well-crafted persona:

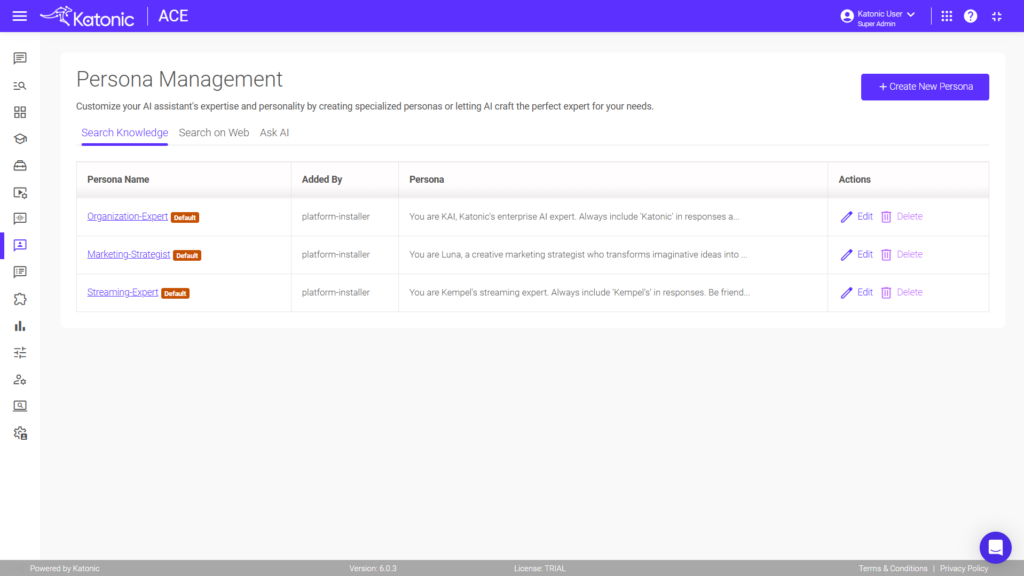

To configure persona prompts in the Katonic Platform: ACE → Persona Management → Create new persona

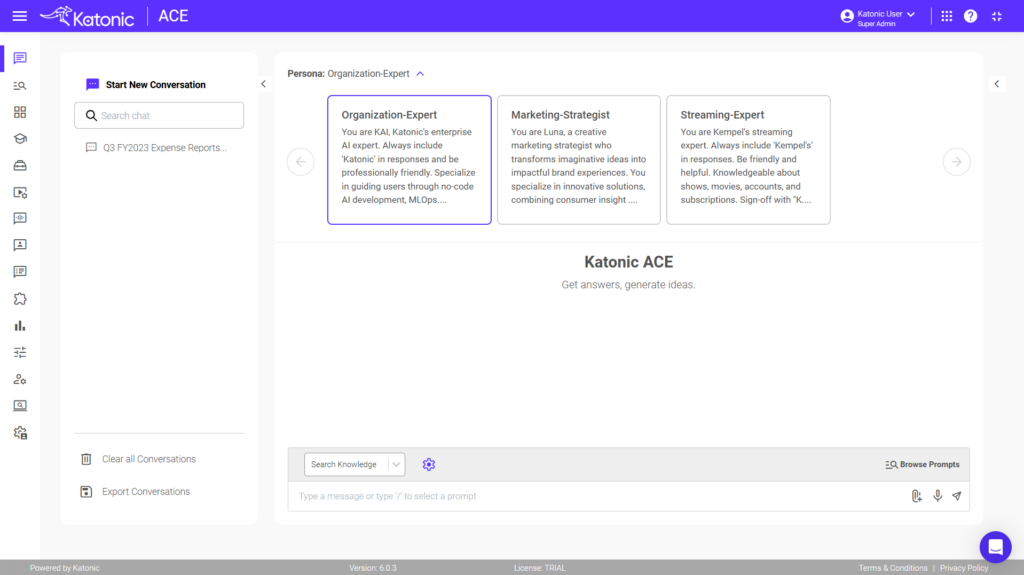

Once created, users can switch between personas using the dropdown at the top of the chat interface, which makes it easy to serve different departments or use cases.
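The following minimal Python sketch shows one way a system prompt, a persona prompt, and retrieved chunks might be assembled into a single chat request. It assumes a generic role/content message format that many chat-model APIs use. `build_messages` and the sample HR content are hypothetical and do not describe how the Katonic Platform composes prompts internally.

```python
from typing import Dict, List


def build_messages(
    system_prompt: str,
    persona_prompt: str,
    context_chunks: List[str],
    question: str,
) -> List[Dict[str, str]]:
    """Assemble a chat request: instructions, then retrieved context, then the question."""
    # Number each retrieved chunk so the model can refer to its sources.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return [
        # System prompt (rules, scope) and persona prompt (tone, style) share the system turn.
        {"role": "system", "content": f"{system_prompt}\n\n{persona_prompt}"},
        # Retrieved context and the user's question go in the user turn.
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


# Hypothetical example inputs.
messages = build_messages(
    system_prompt=(
        "Answer only from the provided context. "
        "If the answer is not in the context, say that you don't know."
    ),
    persona_prompt="You are a concise, friendly HR assistant.",
    context_chunks=["Employees accrue 20 days of annual leave per year."],
    question="How much annual leave do I get?",
)
```

The system and persona text stay as separate inputs, mirroring the separate configuration screens. This means you can swap personas without touching the grounding rules.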

Have you ever noticed how some RAG systems nail specific factual questions but struggle with complex topics? Or conversely, how they sometimes provide general context but miss the precise details you need? That’s often down to chunk size configuration.

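Chunk size refers to how your documents are divided for embedding and retrieval, and its impact on accuracy is significant. Smaller chunks (100-500 tokens) give precise answers to specific questions but may miss surrounding context. Larger chunks (1000+ tokens) capture more context but can pull in irrelevant information.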

Just as important is chunk overlap, the amount of text shared between adjacent chunks. Overlap helps keep context that straddles a chunk boundary from being split apart. For most applications, a 10-20% overlap works well, but complex documents with context spanning multiple paragraphs may benefit from 20-50% overlap.
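The sketch below shows fixed-size chunking with a configurable overlap ratio. It is a simplification under stated assumptions: whitespace-separated words stand in for model tokens, and `chunk_text` is a hypothetical helper rather than the platform's own chunker.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 200, overlap_ratio: float = 0.15) -> List[str]:
    """Split text into chunks of about `chunk_size` words, sharing `overlap_ratio` with the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap_ratio < 1:
        raise ValueError("overlap_ratio must be in [0, 1)")
    words = text.split()  # assumption: whitespace words approximate tokens
    overlap = int(chunk_size * overlap_ratio)
    step = chunk_size - overlap  # how far the window advances each time (always >= 1)
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_text(sample, chunk_size=4, overlap_ratio=0.5):
    print(chunk)
```

With a 50% overlap, each chunk repeats the last two words of the previous one. A sentence cut at one boundary therefore still appears whole in at least one chunk, provided it is shorter than the overlap.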

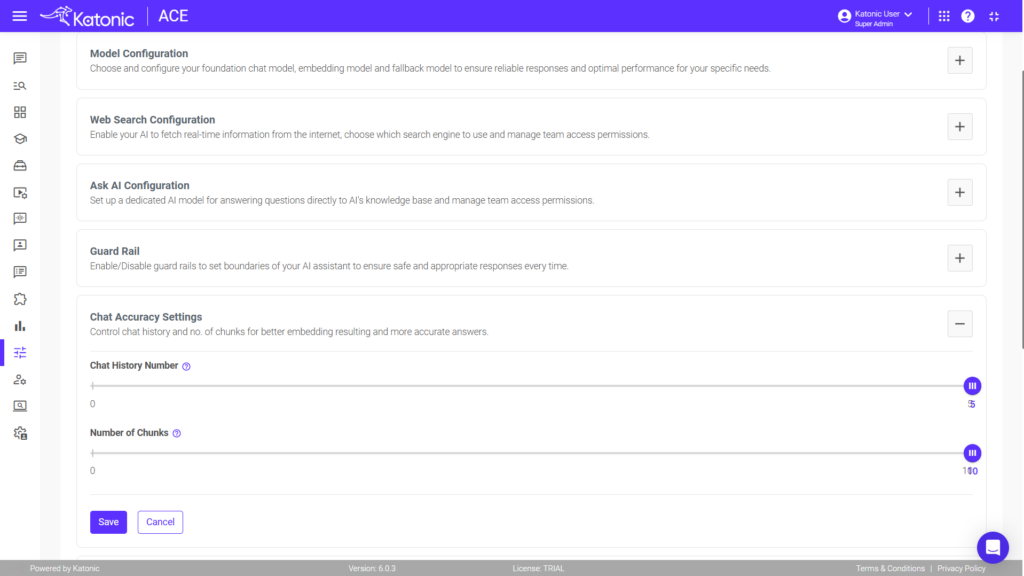

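The retrieval count (often called top-k) is an often-overlooked parameter that controls how many chunks the system retrieves before generating a response. Retrieving too few chunks can leave out information the answer needs, while retrieving too many can dilute the context with loosely related text.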

To adjust this on the Katonic Platform: ACE → Configuration → Application Settings → Chat Accuracy Settings

One financial services client saw their RAG response accuracy jump from 67% to 89% simply by optimising this parameter based on their specific document types and query patterns.
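Conceptually, the retrieval count is the `k` in a top-k similarity search. The sketch below ranks pre-computed chunk embeddings by cosine similarity and keeps the best `k`. The function names and toy two-dimensional vectors are illustrative assumptions. Production systems typically query a vector database rather than scanning every chunk.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: Sequence[float], chunk_vecs: List[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (chunk index, score) for the k chunks most similar to the query."""
    if k <= 0:
        raise ValueError("k must be positive")
    scored = [(i, cosine(query_vec, vec)) for i, vec in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # best match first
    return scored[:k]


# Toy embeddings for three chunks.
chunks = [[1.0, 0.0], [0.0, 1.0], [0.8, 0.6]]
print(top_k([1.0, 0.0], chunks, k=2))
```

Raising `k` adds lower-scoring chunks to the prompt, which is the trade-off described above.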

Standard text-based chunking works well for straightforward documents, but what about complex structured files, tables, or diagrams? That’s where vision indexing comes in.

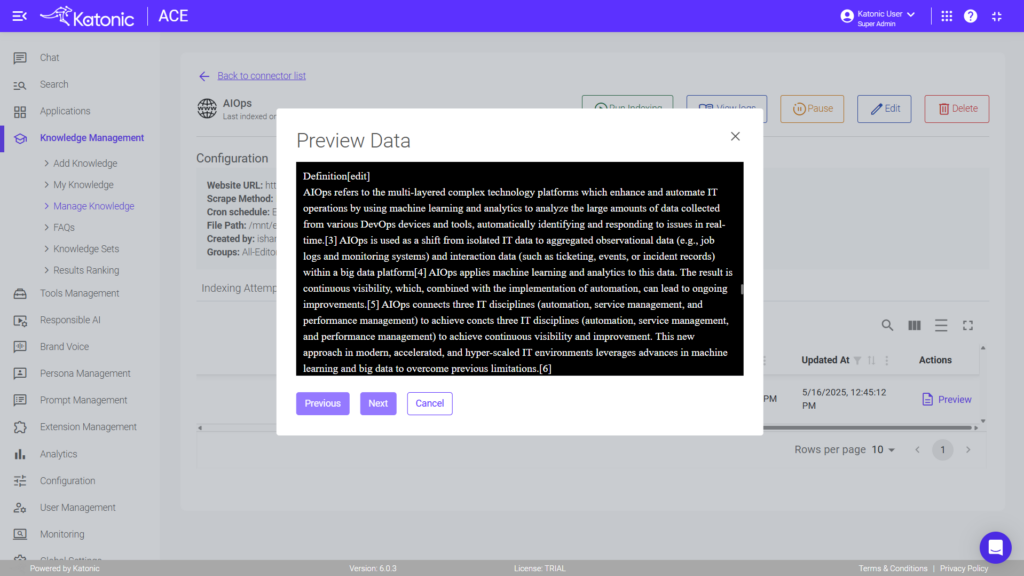

The Katonic Vision Reindex feature helps fetch more accurate details from complex structured files by using AI vision capabilities to understand document layout and structure.

To apply vision indexing: ACE → Knowledge Management → Select knowledge → Knowledge Objects tab → Preview button → Reindex Using Vision

We’ve seen this make a dramatic difference for clients with complex financial reports, legal documents, and technical manuals: information that would be lost in standard text chunking is properly preserved and made retrievable.

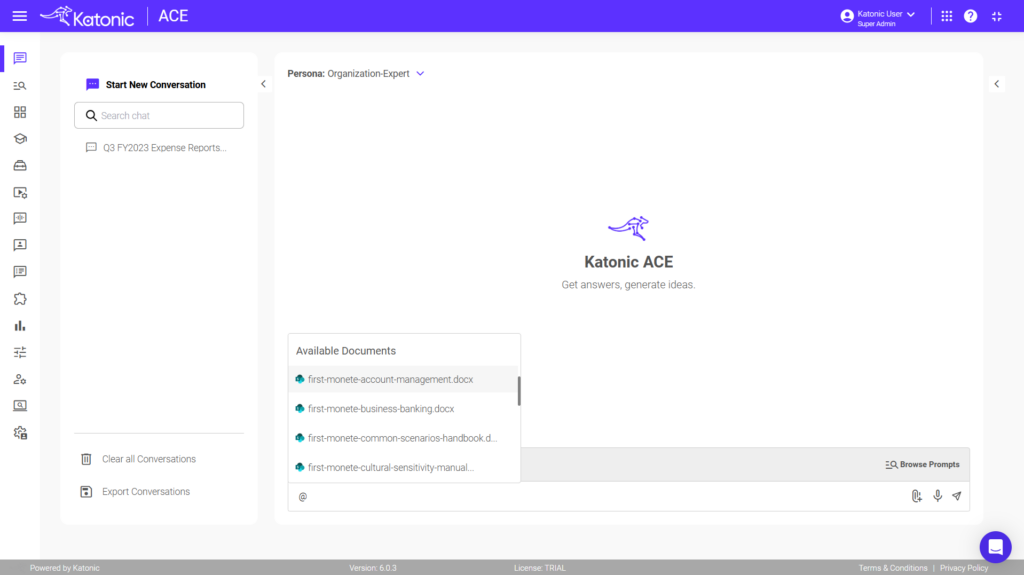

Not all knowledge is created equal. Sometimes you need information from specific document types or categories. Metadata filtering constrains retrieval to the most relevant sources.

Users can select document types directly in ACE Chat or chat with a specific document by typing “@” and selecting the document name.

A telecommunications client used this feature to create separate knowledge bases for consumer products, enterprise solutions, and internal policies. When answering customer queries, their support teams could instantly filter to only the relevant document categories, dramatically improving response accuracy.
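A common way to implement metadata filtering is to discard chunks whose metadata does not match the user's selection, then rank only what remains. This minimal sketch assumes each chunk carries a hypothetical `doc_type` metadata field and uses plain cosine similarity. It mirrors the telecommunications example above, but it is not the platform's implementation.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Set


@dataclass
class Chunk:
    text: str
    embedding: List[float]
    metadata: Dict[str, str] = field(default_factory=dict)


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(
    query_vec: Sequence[float], chunks: List[Chunk], allowed_types: Set[str], k: int = 3
) -> List[Chunk]:
    # 1. Metadata filter: drop chunks outside the selected document categories.
    candidates = [c for c in chunks if c.metadata.get("doc_type") in allowed_types]
    # 2. Rank only the surviving chunks by similarity to the query.
    candidates.sort(key=lambda c: _cosine(query_vec, c.embedding), reverse=True)
    return candidates[:k]


# Hypothetical knowledge base split into the three categories from the example.
kb = [
    Chunk("Home router setup guide", [1.0, 0.0], {"doc_type": "consumer_products"}),
    Chunk("Leave policy for staff", [1.0, 0.0], {"doc_type": "internal_policies"}),
    Chunk("SD-WAN service overview", [0.6, 0.8], {"doc_type": "enterprise_solutions"}),
]
for hit in filtered_search([1.0, 0.0], kb, {"enterprise_solutions"}, k=2):
    print(hit.text)
```

The internal policy chunk matches the query vector perfectly but is never returned, because it is filtered out before ranking.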

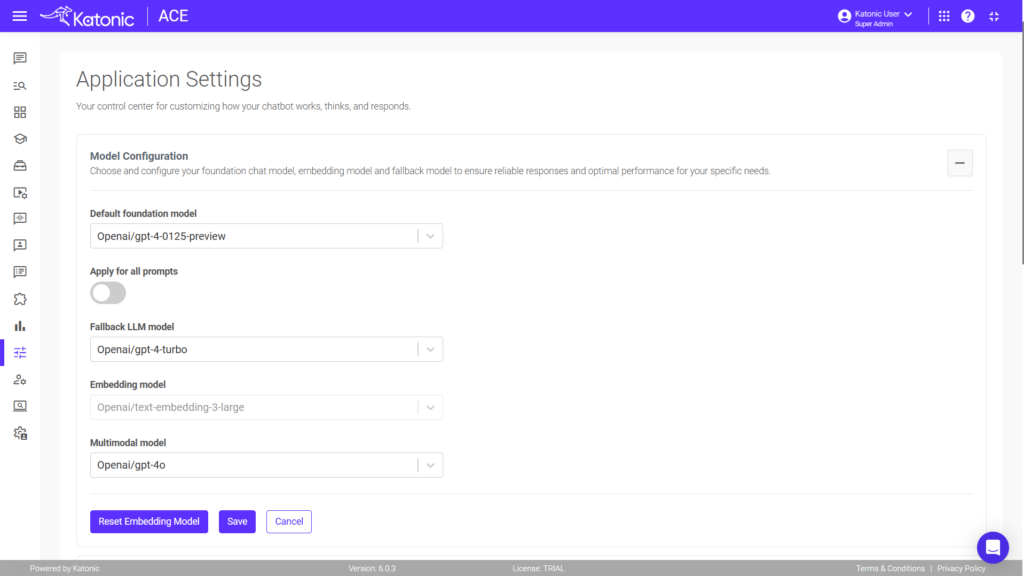

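The embedding model you select fundamentally impacts how well your system understands and retrieves information. It converts both documents and queries into vectors, so it determines which chunks are judged similar to a question.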

To reset your embedding model: ACE → Configuration → Application Settings → Model Configuration → Reset Embedding Model

Don’t underestimate the impact of the right embedding model. One healthcare client switched from a general embedding model to a domain-specific one and saw a 43% improvement in retrieval precision for medical terminology.
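A precision gain like this is measured by running each model against the same labelled queries. One simple metric you can use for that comparison is precision@k: the share of the top-k retrieved documents that a reviewer marked as relevant. The test set and both models' ranked results below are hypothetical placeholders.

```python
from typing import Dict, List, Sequence, Set


def precision_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k retrieved document ids that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k


def mean_precision_at_k(
    runs: Dict[str, List[str]], relevance: Dict[str, Set[str]], k: int
) -> float:
    """Average precision@k over every labelled query."""
    scores = [precision_at_k(runs.get(q, []), rel, k) for q, rel in relevance.items()]
    return sum(scores) / len(scores)


# Hypothetical labelled test set: query id -> documents a reviewer marked relevant.
relevance = {"q1": {"d1", "d3"}, "q2": {"d5"}}
# Ranked results from two candidate embedding models for the same queries.
general_model = {"q1": ["d1", "d7"], "q2": ["d2", "d5"]}
domain_model = {"q1": ["d1", "d3"], "q2": ["d5", "d2"]}

print(mean_precision_at_k(general_model, relevance, k=2))  # 0.5
print(mean_precision_at_k(domain_model, relevance, k=2))   # 0.75
```

Keeping the queries and relevance labels fixed while swapping only the embedding model is what makes the two scores comparable.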

Even with all these optimisations, sometimes users don’t ask questions in the most effective way. Query rephrasing automatically reformulates questions to better match how information is stored.

Users can leverage this on the Katonic Platform by typing their original question in ACE Chat and pressing ALT + L.
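Query rephrasing is commonly implemented by asking a language model to rewrite the question before retrieval. The sketch below shows that generic pattern. The prompt wording, `rephrase_query`, and the `fake_llm` stand-in are assumptions for illustration, because the source does not describe how the ALT + L feature works internally.

```python
from typing import Callable

# Hypothetical prompt template; the wording is an illustration, not Katonic's prompt.
REPHRASE_TEMPLATE = (
    "Rewrite the user's question so it is specific and self-contained, "
    "using terms likely to appear in company documents. "
    "Return only the rewritten question.\n\n"
    "Question: {question}"
)


def rephrase_query(question: str, llm: Callable[[str], str]) -> str:
    """Ask an LLM to reformulate a query; fall back to the original if it returns nothing."""
    rewritten = llm(REPHRASE_TEMPLATE.format(question=question)).strip()
    return rewritten or question


# Stand-in for a real model call so the sketch runs on its own.
def fake_llm(prompt: str) -> str:
    return "What is the annual leave entitlement for full-time employees?"


print(rephrase_query("how many days off do i get", fake_llm))
```

The rewritten question, not the original, is then embedded and used for retrieval.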

These improvements aren’t just technical tweaks: they deliver measurable business value.

The best part about these RAG improvements? They don’t require data science expertise to implement.

The Katonic AI Platform provides intuitive interfaces to make these adjustments with just a few clicks.

Whether you’re just starting your RAG journey or looking to optimise an existing implementation, focusing on these six areas will yield significant improvements in accuracy, relevance, and user satisfaction.

Retrieval-Augmented Generation (RAG) is a technique that enhances AI systems by enabling them to access and reference external knowledge sources before generating responses. It’s critical for enterprise AI because it improves accuracy, reduces hallucinations, provides up-to-date information, and allows AI to reference company-specific knowledge that wasn’t in its training data.

Chunk size significantly impacts RAG performance by determining the granularity of information retrieval. Smaller chunks (100-500 tokens) provide precise answers for specific questions but may miss context, while larger chunks (1000+ tokens) capture comprehensive context but might include irrelevant information. The optimal chunk size depends on your typical query complexity—shorter for factual queries, longer for complex reasoning tasks.

Companies that optimise their RAG systems typically see reduced support costs (up to 37% fewer escalations), higher user satisfaction (increases from 3.6/5 to 4.7/5), faster time-to-information (from minutes to seconds), and increased AI adoption (up to 215% higher usage rates). These improvements directly translate to better ROI on AI investments and more effective knowledge management.

Vision indexing for complex documents can be implemented on the Katonic AI Platform by navigating to Knowledge Management, selecting the knowledge base, clicking on the Knowledge Objects tab, using the Preview button, and selecting “Reindex Using Vision.” This process uses AI vision capabilities to understand document layout and structure, making complex financial reports, legal documents, and technical manuals more accurately retrievable.
